NVIDIA just launched the TU102/TU104 (GeForce RTX 2080 Ti/2080), the first GPUs based on the Turing architecture. This new architecture brings hardware ray-tracing acceleration, as well as many other new and really cool graphics features. A good high-level overview of the architecture and new graphics features can be found in the Turing Architecture whitepaper as well as this blog post. Most of these features are exposed through both Vulkan and OpenGL extensions, and I will quickly go through each of them in this post. A big thanks to the many people at NVIDIA who worked hard to provide us with these extensions!

Most features are split into a Vulkan- or OpenGL-specific extension (GL_*/VK_*) and a GLSL or SPIR-V shader extension (GLSL_*/SPV_*).

Ray-Tracing Acceleration

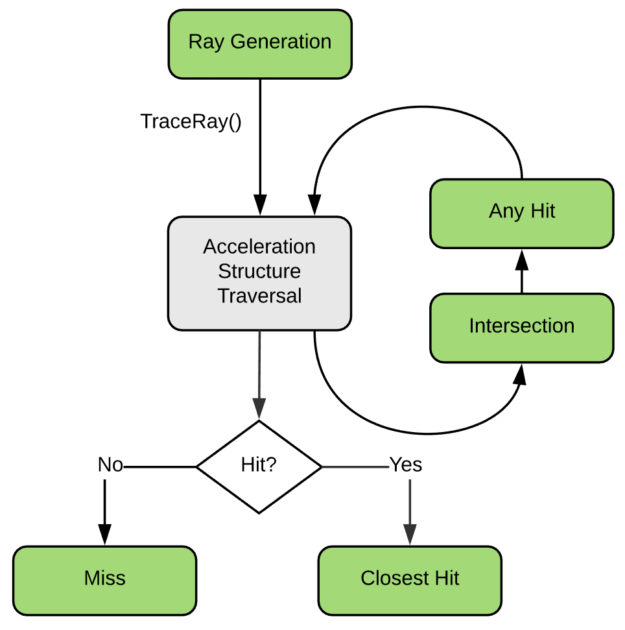

Turing brings hardware acceleration for ray-tracing through dedicated units called RT cores. The RT cores provide BVH traversal as well as ray-triangle intersection. This acceleration is exposed in Vulkan through a new ray-tracing pipeline, associated with a series of new shader stages. This programming model mirrors the DXR (DirectX Raytracing) model, which is briefly described in this blog post; this blog post details the Vulkan implementation.
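To give an idea of the kind of work the RT cores take off the shader cores, here is a minimal CPU sketch of a ray-triangle intersection test. It uses the classic Möller-Trumbore algorithm, which is my choice for illustration only: the actual hardware implementation is not public, and the names `Vec3`, `Hit` and `intersectTriangle` are hypothetical.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<float, 3>;

static Vec3 sub(const Vec3& a, const Vec3& b) {
    return {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
}
static Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]};
}
static float dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Hit distance along the ray (t) and barycentric coordinates (u, v)
// of the hit point relative to vertices v1 and v2.
struct Hit { float t, u, v; };

// Moller-Trumbore ray-triangle test (illustrative; not the hardware algorithm).
// Returns true and fills 'hit' when the ray hits the triangle at t >= 0.
bool intersectTriangle(const Vec3& origin, const Vec3& dir,
                       const Vec3& v0, const Vec3& v1, const Vec3& v2,
                       Hit& hit) {
    const float kEps = 1e-7f;
    const Vec3 e1 = sub(v1, v0);
    const Vec3 e2 = sub(v2, v0);
    const Vec3 p = cross(dir, e2);
    const float det = dot(e1, p);
    if (std::fabs(det) < kEps) return false;  // ray parallel to the triangle
    const float invDet = 1.0f / det;
    const Vec3 s = sub(origin, v0);
    const float u = dot(s, p) * invDet;
    if (u < 0.0f || u > 1.0f) return false;
    const Vec3 q = cross(s, e1);
    const float v = dot(dir, q) * invDet;
    if (v < 0.0f || u + v > 1.0f) return false;
    const float t = dot(e2, q) * invDet;
    if (t < 0.0f) return false;               // triangle is behind the ray origin
    hit = Hit{t, u, v};
    return true;
}
```

A BVH traversal would call a test like this only for the triangles stored in the leaves that the ray actually reaches.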

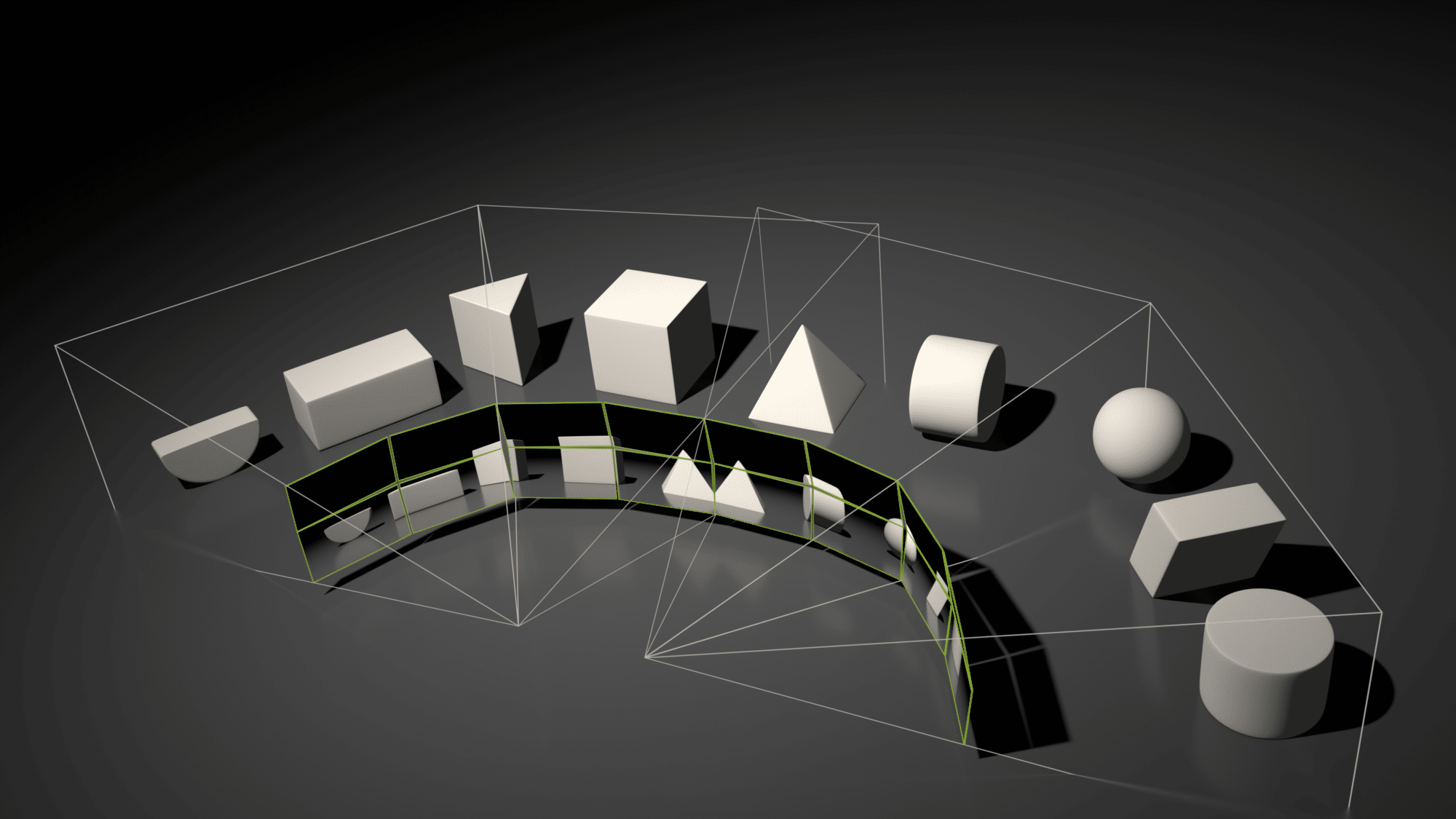

Mesh Shading

This is a new programmable geometry pipeline which replaces the traditional VS/HS/DS/GS pipeline with what is essentially a compute-based programming model. This new pipeline is based on two shader stages, a Task Shader and a Mesh Shader (separated by an expansion stage), which are used to ultimately generate a compact mesh description called a Meshlet. A Meshlet is a small indexed geometry representation which is kept on chip and fed directly to the rasterizer. This exposes a very flexible and very efficient model with Compute Shader features and generic cooperative thread groups (workgroups, shared memory, barrier synchronizations...). Applications are endless: this can for instance be used to implement efficient culling or LOD schemes, or to perform procedural geometry generation.
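As a rough illustration of what a meshlet looks like as a data structure, here is a minimal CPU sketch that greedily splits an indexed triangle list into meshlets. The `Meshlet` layout, the `buildMeshlets` function and the default limits of 64 vertices and 126 triangles are assumptions made for this example, not something mandated by the extensions.

```cpp
#include <cstddef>
#include <cstdint>
#include <unordered_map>
#include <vector>

// Hypothetical meshlet layout: a small vertex list plus local triangle indices.
struct Meshlet {
    std::vector<uint32_t> vertices;    // unique indices into the global vertex buffer
    std::vector<uint8_t>  primitives;  // 3 local (meshlet-relative) indices per triangle
};

// Greedily packs triangles into meshlets, in index-buffer order.
// Assumes 3 <= maxVertices <= 256 so that local indices fit in 8 bits.
std::vector<Meshlet> buildMeshlets(const std::vector<uint32_t>& indices,
                                   std::size_t maxVertices = 64,
                                   std::size_t maxPrimitives = 126) {
    std::vector<Meshlet> meshlets;
    Meshlet current;
    std::unordered_map<uint32_t, uint8_t> localIndex;  // global index -> local index

    for (std::size_t i = 0; i + 2 < indices.size(); i += 3) {
        // Count how many vertices this triangle would add (conservative estimate).
        std::size_t newVertices = 0;
        for (int k = 0; k < 3; ++k) {
            if (localIndex.count(indices[i + k]) == 0) ++newVertices;
        }
        // Start a new meshlet when the triangle does not fit in the current one.
        if (current.vertices.size() + newVertices > maxVertices ||
            current.primitives.size() / 3 + 1 > maxPrimitives) {
            meshlets.push_back(current);
            current = Meshlet();
            localIndex.clear();
        }
        for (int k = 0; k < 3; ++k) {
            const uint32_t v = indices[i + k];
            auto it = localIndex.find(v);
            if (it == localIndex.end()) {
                const uint8_t local = static_cast<uint8_t>(current.vertices.size());
                it = localIndex.emplace(v, local).first;
                current.vertices.push_back(v);
            }
            current.primitives.push_back(it->second);
        }
    }
    if (!current.primitives.empty()) meshlets.push_back(current);
    return meshlets;
}
```

A production builder would typically also optimize for vertex reuse and spatial locality, which helps per-meshlet culling in the Task Shader; this sketch only shows the packing itself.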

Many details can be found in this excellent blog post by Christoph Kubisch: https://devblogs.nvidia.com/introduction-turing-mesh-shaders/

A full OpenGL sample which implements a compute-based adaptive tessellation technique can also be found here: https://github.com/jdupuy/opengl-framework/tree/master/demo-isubd-terrain

Variable Rate Shading

- VK_NV_shading_rate_image / GL_NV_shading_rate_image (GLSL_NV_shading_rate_image / SPV_NV_shading_rate)

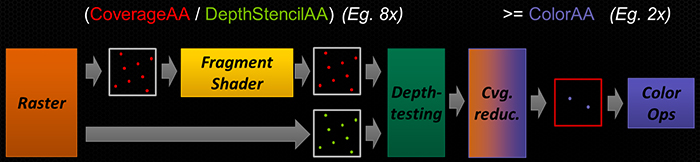

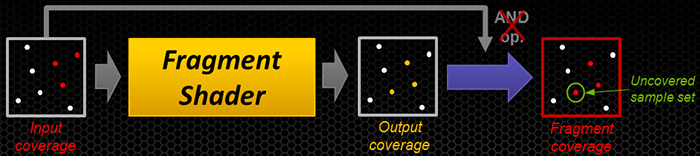

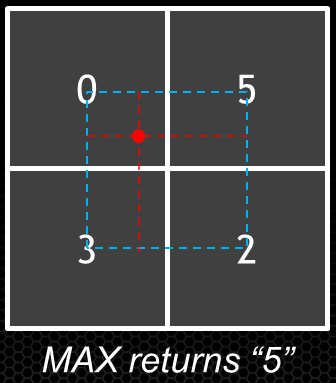

This is a very powerful hardware feature which allows the application to dynamically control the number of fragment shader invocations (independently of the visibility rate) and vary this shading rate across the framebuffer. The shading rate is controlled using a texture image ("Shading Rate Image", 8 bits per texel) where each texel specifies an independent shading rate for a block of 16x16 pixels. The rate is actually specified indirectly, using 8-bit indices into a palette which is specified per-viewport and stores the actual shading rate flags.

The GLSL extension also exposes intrinsics allowing fragment shaders to read the effective fragment size in pixels (gl_FragmentSizeNV) as well as the number of fragment shader invocations for a fully covered pixel (gl_InvocationsPerPixelNV). This opens the road to many new algorithms and more efficient implementations of optimized shading rate techniques, like Foveated Rendering, Lens Adaptation (for VR), and Content or Motion Adaptive Shading.
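The indirection through the palette can be sketched on the CPU as follows. This is only a model of the lookup described above: `FragmentSize`, `ShadingRateImage` and `lookupShadingRate` are hypothetical names, the palette here stores a fragment size rather than the real API's shading rate enums, and clamping out-of-range indices is a choice made for this sketch.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical palette entry: size in pixels covered by one fragment shader invocation.
struct FragmentSize { int width; int height; };

// Hypothetical CPU-side view of a shading rate image: one 8-bit palette index per texel.
struct ShadingRateImage {
    int widthInTexels;
    int heightInTexels;
    std::vector<uint8_t> texels;
};

// Each texel of the shading rate image covers a 16x16 block of pixels.
constexpr int kTexelSizeInPixels = 16;

// Returns the shading rate that applies to a given framebuffer pixel.
// Assumes the pixel lies inside the image and the palette is not empty.
FragmentSize lookupShadingRate(const ShadingRateImage& image,
                               const std::vector<FragmentSize>& palette,
                               int pixelX, int pixelY) {
    const int texelX = pixelX / kTexelSizeInPixels;
    const int texelY = pixelY / kTexelSizeInPixels;
    std::size_t index = image.texels[static_cast<std::size_t>(texelY) *
                                     image.widthInTexels + texelX];
    // Sketch-only policy: clamp indices that fall outside the palette.
    if (index >= palette.size()) index = palette.size() - 1;
    return palette[index];
}
```

Because the image only stores indices, swapping the palette changes the rate of every block at once without touching the image, which is convenient for things like a global quality setting.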

Exclusive Scissor Test

Texture Access Footprint

- VK_NV_shader_image_footprint / GL_NV_shader_texture_footprint (GLSL_NV_shader_texture_footprint / SPV_NV_shader_image_footprint)

Derivatives in Compute Shader

- VK_NV_compute_shader_derivatives / GL_NV_compute_shader_derivatives (GLSL_NV_compute_shader_derivatives / SPV_NV_compute_shader_derivatives)

Two layout qualifiers are provided to specify how invocations are arranged into quads, based on either a linear index or 2D indices.
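The difference between the two arrangements can be sketched on the CPU like this: the linear mode groups every four consecutive local invocation indices into a quad, while the 2D mode groups 2x2 blocks of local invocation IDs. The `QuadSlot` struct, the function names and the quad numbering are hypothetical and only serve the illustration.

```cpp
#include <cstdint>

// Hypothetical result: which quad an invocation belongs to, and its slot (0..3) in it.
struct QuadSlot { uint32_t quad; uint32_t lane; };

// Linear arrangement: invocations 0-3 form quad 0, 4-7 form quad 1, and so on.
// Assumes the total workgroup size is a multiple of 4.
QuadSlot quadFromLinearIndex(uint32_t localInvocationIndex) {
    return {localInvocationIndex / 4, localInvocationIndex % 4};
}

// 2D arrangement: each 2x2 block of (x, y) local invocation IDs forms a quad.
// Assumes the workgroup width and height are multiples of 2.
QuadSlot quadFrom2DIndices(uint32_t x, uint32_t y, uint32_t groupWidth) {
    const uint32_t quadsPerRow = groupWidth / 2;
    return {(y / 2) * quadsPerRow + (x / 2), (y % 2) * 2 + (x % 2)};
}
```

For an 8x8 workgroup, invocation (3, 1) has linear index 11: it sits in the third linear quad (index 2) but in the second 2x2 quad (index 1), so the neighbors used for its derivatives differ between the two modes.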

Shader Subgroup Operations

- VK_NV_shader_subgroup_partitioned / GL_NV_shader_subgroup_partitioned (SPV_NV_shader_subgroup_partitioned)

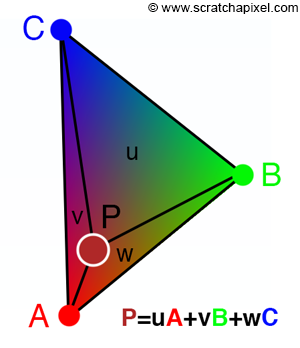

Barycentric Coordinates and manual attributes interpolation

- VK_NV_fragment_shader_barycentric / GL_NV_fragment_shader_barycentric (GLSL_NV_fragment_shader_barycentric / SPV_NV_fragment_shader_barycentric)

Illustration courtesy of Jean-Colas Prunier, https://www.scratchapixel.com/
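Manual interpolation boils down to weighting the three per-vertex values by the barycentric coordinates of the fragment. Here is a minimal CPU sketch of that computation; `Vec3` and `interpolate` are hypothetical names, and in a real fragment shader the weights would come from the built-in exposed by the extension (gl_BaryCoordNV in GLSL, as far as I know) rather than from a function argument.

```cpp
#include <array>

using Vec3 = std::array<float, 3>;

// Interpolates a 3-component attribute from its three per-vertex values,
// using barycentric weights (bary[0] + bary[1] + bary[2] is expected to be 1).
Vec3 interpolate(const Vec3& a0, const Vec3& a1, const Vec3& a2, const Vec3& bary) {
    Vec3 result = {0.0f, 0.0f, 0.0f};
    for (int i = 0; i < 3; ++i) {
        result[i] = bary[0] * a0[i] + bary[1] * a1[i] + bary[2] * a2[i];
    }
    return result;
}
```

Having both the raw per-vertex values and the weights available is what makes custom schemes possible, for example a non-linear interpolation or a wireframe overlay based on the distance to the nearest edge.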

Ptex Hardware Acceleration

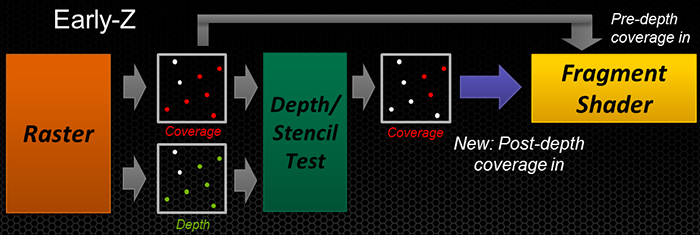

Representative Fragment Test

This extension has been designed to optimize occlusion query techniques which rely on per-fragment recording of visible primitives. It allows the hardware to stop generating fragments and stop emitting fragment shader invocations for a given primitive once a single fragment has passed the early depth and stencil tests. This reduced subset of fragment shader invocations can then be used to record visible primitives more efficiently. This is only a performance optimization: no guarantee is given on the number of discarded fragments, and consequently on the number of fragment shader invocations that will actually be executed.

Multi-View Rendering

More info on Multi-View Rendering in this blog post: https://devblogs.nvidia.com/turing-multi-view-rendering-vrworks/